When Parallel AI first announced their Deep Research API, I was intrigued. I played around with it and thought it did a great job. Of course, I pay for ChatGPT, Claude, and Gemini so I don’t really have need for another Deep Research product.

And I’ve already built my own.

So I set Parallel aside… until last week when they announced their Search API. My go-to for search so far has been Exa but I decided to test Parallel out for a new project I’m working on with a VC client, and I’m very impressed.

The client wants a fully automated due diligence system. That doesn’t mean they’ll stop doing their own research, but doing it all manually is tedious and takes dozens of analyst hours. In fact, many VCs skip this step altogether (which is why we see such insane funding rounds).

Part of that system is conducting market research, identifying competitors in the space, where the gaps are, and how the startup they’re interested in is positioned.

So the build I’m going to show you is a simplified version of that. Enter any startup URL and get a complete competitive analysis in a couple of minutes.

What We’re Building

In this tutorial, you’ll build a VC Market Research Agent that automates the competitive analysis part of due diligence. Give it a startup URL, and it will:

1. Understand the target – Extract what the company does, who they serve, and how they position themselves

2. Find the competitors – Discover all players in the market using AI-powered search (not just the obvious ones)

3. Analyze the landscape – Deep dive into each competitor’s strengths, weaknesses, and positioning

4. Identify opportunities – Find the whitespaces where no one is competing effectively

5. Generate a report – Create an investment-ready markdown document with actionable insights

Why Parallel?

Parallel has a proprietary web index which they’ve apparently been building for two years. The Search API is built on top of that and designed for AI. This means it isn’t optimizing for content that a human might click on (like Google does) but content that will fully answer the task the AI is given.

So their search architecture goes beyond keyword matching into semantic meaning, and it prioritizes the pages most relevant to the AI’s objective rather than pages optimized for human engagement.

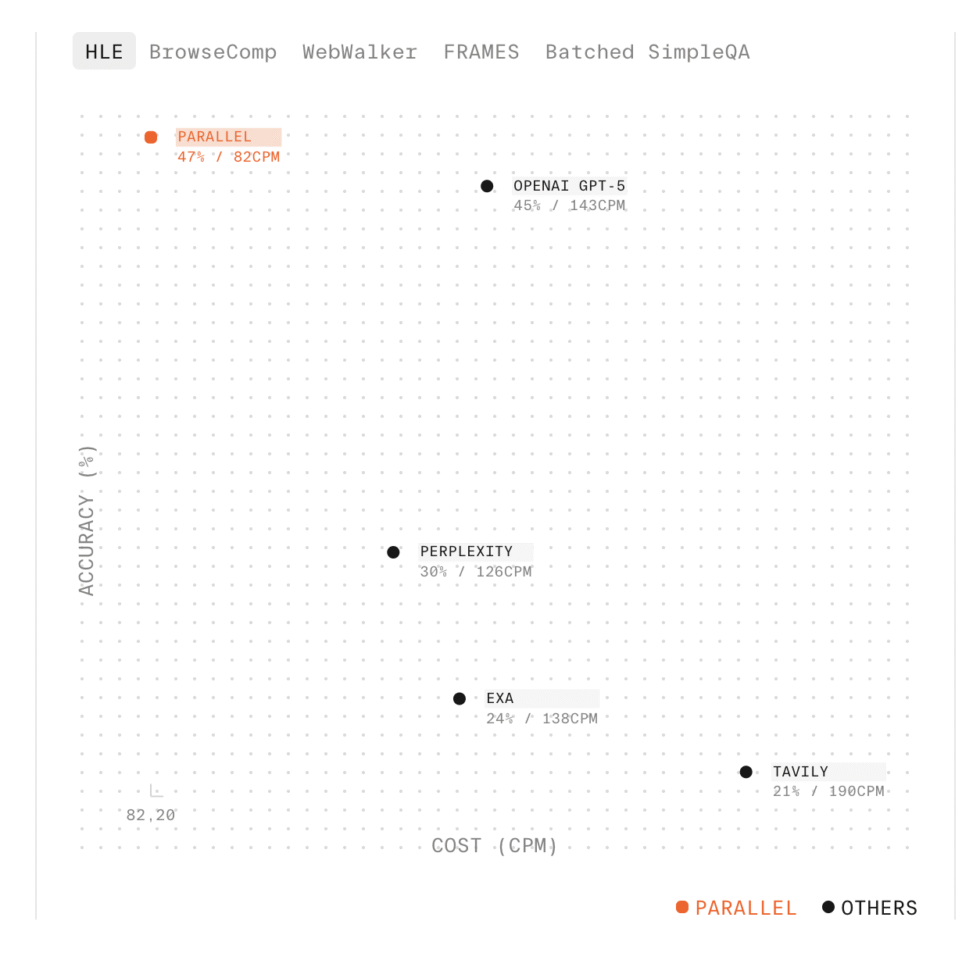

Exa is built this way too but according to the performance benchmarks, Parallel has the highest accuracy for the lowest cost.

This is why we’re using Parallel AI. It’s specifically built for complex research tasks like this: in those benchmarks it scores 47% accuracy, compared to 45% for GPT-5 and 30% for Perplexity, at a much lower cost.

The Architecture: How This Agent Works

Here’s the mental model for what we’re building:

Startup URL → Analyze → Discover Competitors → Analyze Each → Find Gaps → Report

Simple, right? But each step needs careful orchestration. Let me break down the key components:

1. Market Discovery — The detective that finds competitors

- Uses Parallel AI’s Search API to find articles mentioning competitors

- Extracts company names from those articles

- Verifies each company has a real website (no hallucinations!)

2. Competitor Analysis — The analyst that digs deep

- Visits each competitor’s website

- Uses Parallel AI’s Extract API to pull structured information

- Identifies strengths, weaknesses, and positioning

3. Opportunity Finder — The strategist that spots whitespace

- Compares all competitors to find patterns

- Identifies what everyone does well (table stakes)

- Identifies what everyone struggles with (opportunities)

4. Report Generator — The writer that synthesizes everything

- Takes all the raw data and creates a readable narrative

- Adds an executive summary for busy partners

- Includes actionable recommendations
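The four components above can be pictured as one function that chains the stages. This is only a minimal sketch of the data flow: `run_pipeline` and its parameter names are hypothetical, and each stage is passed in as a plain callable so the wiring can be tried with stubs before any API calls exist.

```python
from typing import Callable


def run_pipeline(
    startup_url: str,
    analyze_target: Callable[[str], dict],
    discover: Callable[[dict], list],
    analyze_competitor: Callable[[dict, dict], dict],
    find_gaps: Callable[[dict, list], dict],
    write_report: Callable[[dict, list, dict], str],
) -> str:
    """Chain the stages; each one only sees the outputs of earlier stages."""
    target = analyze_target(startup_url)            # Startup URL -> Analyze
    competitors = discover(target)                  # Discover Competitors
    details = [analyze_competitor(c, target) for c in competitors]  # Analyze Each
    gaps = find_gaps(target, details)               # Find Gaps
    return write_report(target, details, gaps)      # Report


# Usage with stub stages standing in for the real API-backed ones.
report = run_pipeline(
    "https://example.com",
    analyze_target=lambda url: {"name": "Acme", "url": url},
    discover=lambda target: [{"name": "Rival"}],
    analyze_competitor=lambda comp, target: {**comp, "strengths": ["fast"]},
    find_gaps=lambda target, details: {"whitespaces": ["mid-market"]},
    write_report=lambda t, d, g: f"{t['name']}: {len(d)} competitor(s)",
)
print(report)
```

Keeping the stages as separate callables also makes it easy to swap one out (say, a different search provider) without touching the rest.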

Now let’s build each piece.

What You’ll Need

Before we start building, grab these:

- Parallel AI API key – You get a bunch of free credits when you sign up

- OpenAI API key – We’re using GPT-4o-mini for the extraction steps to keep costs down on this POC, since we make a number of API calls, and GPT-4o for the analysis steps.

- 30-60 minutes – Grab coffee, this is fun

Let’s start with the basics. Create a new directory and set up a clean Python environment, then install dependencies:

```bash
pip install requests openai python-dotenv
```

Now create the project structure:

```bash
mkdir data reports
touch main.py market_discovery.py competitor_analysis.py report_generator.py
```

Here’s what each file does:

- main.py – The orchestrator that runs everything

- market_discovery.py – Analyzes the target startup, then uses Parallel AI’s Search API to find articles mentioning competitors and extracts the company names from them

- competitor_analysis.py – Uses Parallel AI’s Extract API to analyze each competitor’s strengths/weaknesses

- report_generator.py – Creates the final markdown report

- data/ – Stores intermediate JSON files (useful for debugging)

- reports/ – Where your final reports go
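The snippets in the steps that follow use `self.headers` without showing where it comes from. Here is a minimal sketch of one way to build it. The environment variable name `PARALLEL_API_KEY`, the helper name, and the `x-api-key` header are assumptions on my part, so check them against Parallel's documentation before relying on them.

```python
import os

# Assumed base URL, matching the endpoints used in this tutorial.
PARALLEL_BASE_URL = "https://api.parallel.ai"


def build_parallel_headers(env=None) -> dict:
    """Build request headers from the environment (hypothetical helper).

    `env` defaults to os.environ; passing a dict makes this easy to test.
    """
    env = os.environ if env is None else env
    api_key = env.get("PARALLEL_API_KEY", "").strip()
    if not api_key:
        raise RuntimeError("Set PARALLEL_API_KEY before running the agent")
    # Assumption: the key is sent in an `x-api-key` header.
    return {"x-api-key": api_key, "Content-Type": "application/json"}
```

If you keep keys in a `.env` file, calling `load_dotenv()` from python-dotenv before this helper makes them visible through `os.environ`.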

STEP 1: Understanding the Target Startup

Now let’s get into the code. Before we can find competitors, we need to understand what the target company actually does. Sounds obvious, but you can’t just scrape the homepage and call it a day.

Companies describe themselves in marketing speak. “We’re transforming the future of enterprise cloud infrastructure with AI-powered solutions” tells you nothing useful for finding competitors.

What we need:

- Actual product description – What do they sell?

- Target market – Who buys it?

- Category – How would you search for alternatives?

- Keywords – What terms would appear on competitor sites?
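Those items map onto a small record. The sketch below shows one possible shape for it, using the same field names the JSON prompt later in this step asks for, plus a tolerant parser for the model's reply. `StartupProfile`, `parse_json_reply`, and `profile_from_reply` are hypothetical names, and the fence-stripping is a precaution for replies that arrive wrapped in a Markdown code fence despite the "valid JSON only" instruction.

```python
import json
from dataclasses import dataclass, field

FENCE = "`" * 3  # a Markdown code fence marker


@dataclass
class StartupProfile:
    name: str
    description: str
    category: str
    target_market: str = ""
    key_features: list = field(default_factory=list)
    keywords: list = field(default_factory=list)


def parse_json_reply(text: str):
    """Parse a model reply as JSON, tolerating a code fence around it."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag)...
        cleaned = cleaned.split("\n", 1)[1] if "\n" in cleaned else ""
        # ...and everything from the closing fence onward.
        cleaned = cleaned.rsplit(FENCE, 1)[0]
    return json.loads(cleaned)


def profile_from_reply(text: str) -> StartupProfile:
    """Turn the reply into a StartupProfile, failing loudly on missing basics."""
    data = parse_json_reply(text)
    missing = [k for k in ("name", "description", "category") if not data.get(k)]
    if missing:
        raise ValueError(f"Model reply is missing fields: {missing}")
    return StartupProfile(
        name=data["name"],
        description=data["description"],
        category=data["category"],
        target_market=data.get("target_market", ""),
        key_features=list(data.get("key_features") or []),
        keywords=list(data.get("keywords") or []),
    )
```

Failing early on a missing `category` or `name` is deliberate: every later search is built from those fields, so a silent blank would waste API calls.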

Now here’s where Parallel AI’s Extract API shines. Instead of writing complex HTML parsers, we just tell it what we want and it figures out the rest.

Open `market_discovery.py` and add this:

```python
response = requests.post(
    "https://api.parallel.ai/v1beta/extract",
    headers=self.headers,
    json={
        "urls": [url],
        "objective": """Extract company information:
- Company name
- Product/service offering
- Target market/customers
- Key features or value propositions
- Industry category""",
        "excerpts": True,
        "full_content": True
    }
)
```

Let’s also turn the extracted content into structured JSON:

```python
structured_response = self.openai_client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[{
        "role": "user",
        "content": f"""Extract structured info from this website content:

{content}

Return JSON with:
- name: company name
- description: 2-3 sentences about what they do
- category: market category (e.g., "AI Infrastructure")
- target_market: who their customers are
- key_features: list of main features
- keywords: 5-10 keywords for finding competitors

Respond ONLY with valid JSON."""
    }],
    temperature=0.3
)
```

STEP 2: Finding the Competitors

Now for the hard part: discovering every competitor in the market. This is where most tools fail. They either:

- Return blog posts instead of companies

- Miss important players

- Include tangentially related companies

- Hallucinate companies that don’t exist

We’re going to solve this with a four-step verification process. It’s more complex than a single API call, but it works reliably.
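Two parts of that process are plain data handling and can be sketched without any API calls: collecting unique article URLs from a search response, and deciding whether a result URL is a company's own site. This sketch assumes each search result carries a `url` field (as the verification loop later in this step reads it). The helper names are hypothetical, and unlike the substring check used later, it matches on the domain suffix, so a company whose name merely contains "linkedin" would not be skipped.

```python
from urllib.parse import urlparse

# Profile and directory sites we never want as a "company website".
SKIP_DOMAINS = ("linkedin.com", "crunchbase.com", "wikipedia.org")


def article_urls(search_json: dict, limit: int = 5) -> list:
    """Collect unique result URLs from a search response, keeping rank order."""
    seen, urls = set(), []
    for result in search_json.get("results", []):
        url = result.get("url")
        if url and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls[:limit]


def company_domain(url: str):
    """Return the bare domain of a URL, or None for skipped or unparseable ones."""
    # urlparse only fills in the host when a scheme or "//" is present.
    host = urlparse(url if "//" in url else "//" + url).hostname or ""
    if host.startswith("www."):
        host = host[4:]
    if not host:
        return None
    if any(host == d or host.endswith("." + d) for d in SKIP_DOMAINS):
        return None
    return host
```

`company_domain` returns `None` rather than raising, so the caller can simply move on to the next search result.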

First, we’ll use the Parallel Search endpoint to find articles that talk about products in the space we’re exploring. They’ve already done the research, so we’ll piggyback on it.

We just need to give Parallel a search objective and it figures out how to do searches. Play around with the prompt here until you find something that works:

```python
search_objective = f"""Find articles about {category} products like {name}

Focus on list articles and product comparison reviews. EXCLUDE general industry overviews."""

search_response = requests.post(
    "https://api.parallel.ai/v1beta/search",
    headers=self.headers,
    json={
        "objective": search_objective,
        "search_queries": keywords,
        "max_results": 5,
        "mode": "one-shot"
    }
)
```

Then, we extract company names from those articles using Parallel AI’s Extract API, which pulls out relevant snippets mentioning competitors:

```python
extract_response = requests.post(
    "https://api.parallel.ai/v1beta/extract",
    headers=self.headers,
    json={
        "urls": article_urls,
        "objective": f"""Extract company names mentioned as competitors in {category}.

For each company: name, brief description, website URL if mentioned.

Focus on actual companies, not blog posts.""",
        "excerpts": True
    }
)
```

As before, we use GPT-4o-mini to parse the information out:

```python
companies_response = self.openai_client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[{
        "role": "system",
        "content": "You are a market analyst identifying DIRECT competitors. Be strict."
    }, {
        "role": "user",
        "content": f"""Extract ONLY direct competitors to: {description}

CONTENT:
{combined_content}

Return JSON array:
[{{"name": "Company", "description": "what they do", "likely_domain": "example.com"}}]

RULES:
- Only companies with THE SAME product type
- Exclude tangentially related companies
- Limit to {max_competitors} most competitive

Respond ONLY with valid JSON."""
    }],
    temperature=0.3
)
```

And finally, we verify each company has a real website. We skip LinkedIn, Crunchbase, and Wikipedia because we want the actual company website, not their company profile.

```python
competitors = []
seen_domains = set()

for company in company_list[:max_competitors]:
    search_query = f"{company['name']} {company.get('likely_domain', '')} official website"

    website_search = requests.post(
        "https://api.parallel.ai/v1beta/search",
        headers=self.headers,
        json={
            "search_queries": [search_query],
            "max_results": 3,
            "mode": "agentic"
        }
    )

    for result in website_search.json()["results"]:
        url = result["url"]
        # [-1] rather than [1] so a URL without a scheme doesn't raise IndexError
        domain = url.split("//")[-1].split("/")[0].replace("www.", "")

        # Skip non-company sites
        if any(skip in domain for skip in ["linkedin", "crunchbase", "wikipedia"]):
            continue

        # Keep the first new domain we see for this company, then move on
        if domain not in seen_domains:
            seen_domains.add(domain)
            competitors.append({
                "name": company["name"],
                "website": url,
                "description": company.get("description", "")
            })
            break

return competitors
```

STEP 3: Analyzing Each Competitor

Now that we have a list of competitors, we need to analyze each one. This is where we dig deep into strengths, weaknesses, and positioning.

For each competitor, we want to know:

- What do they offer?

- Who are their customers?

- What are they good at? (strengths)

- Where do they fall short? (weaknesses)

- How do they position themselves?

- How do they compare to our target startup?
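Those questions become the fields of one record per competitor. The report step later reads `comp['name']`, `comp['website']`, and `comp.get('strengths')`, so the model's JSON needs to be merged with what we already know and coerced into predictable types. A minimal sketch, with a hypothetical helper name and the field names taken from the analysis prompt in this step:

```python
LIST_FIELDS = ("key_features", "strengths", "weaknesses")
TEXT_FIELDS = (
    "product_overview",
    "target_customers",
    "pricing_model",
    "market_position",
    "comparison_to_target",
)


def normalize_analysis(raw: dict, name: str, website: str) -> dict:
    """Merge parsed model output with known facts and coerce field types."""
    result = {"name": name, "website": website}
    for key in LIST_FIELDS:
        value = raw.get(key) or []
        # A model may return a single string where a list was requested.
        result[key] = [value] if isinstance(value, str) else list(value)
    for key in TEXT_FIELDS:
        # Missing or null fields become empty strings.
        result[key] = str(raw.get(key) or "")
    return result


# Usage: `raw` stands in for the parsed JSON from the analysis call.
raw = {"strengths": "Fast indexing", "weaknesses": ["Pricey"], "pricing_model": None}
print(normalize_analysis(raw, "Rival", "https://rival.example"))
```

With every record in the same shape, the gap analysis and report code can skip competitors whose `strengths` list came back empty instead of crashing on a missing key.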

Add this to `competitor_analysis.py`:

```python
response = requests.post(
    f"{self.base_url}/v1beta/extract",
    headers=self.headers,
    json={
        "urls": [url],
        "objective": f"""Extract information about {name}:
- Product offerings and features
- Target market and customers
- Pricing (if available)
- Unique selling points
- Technology stack (if mentioned)
- Case studies or testimonials""",
        "excerpts": True,
        "full_content": True
    }
)
```

And now you know the drill: we call GPT-4o to parse it out:

```python
analysis = self.openai_client.chat.completions.create(
    model="gpt-4o",
    messages=[{
        "role": "system",
        "content": "You are a VC analyst conducting competitive analysis."
    }, {
        "role": "user",
        "content": f"""Analyze this competitor relative to our target startup.

TARGET STARTUP:
Name: {target_startup['name']}
Description: {target_startup['description']}
Category: {target_startup['category']}

COMPETITOR: {name}

WEBSITE CONTENT:
{content[:6000]}

Provide JSON with:
- product_overview: what they offer
- target_customers: who they serve
- key_features: array of main features
- strengths: array of 3-5 competitive advantages
- weaknesses: array of 3-5 gaps or weak points
- pricing_model: how they charge (if known)
- market_position: their positioning (e.g., "Enterprise leader")
- comparison_to_target: 2-3 sentences comparing to target

Be objective. Identify both what they do well AND poorly.

Respond ONLY with valid JSON."""
    }],
    temperature=0.4
)
```

STEP 4: Finding Market Whitespace

The hard work is done. We just need to pass all the information we’ve collected to OpenAI so it can analyze it and find the whitespace. This is really just prompt engineering:

```python
competitor_summary = [{
    "name": comp["name"],
    "strengths": comp.get("strengths", []),
    "weaknesses": comp.get("weaknesses", []),
    "market_position": comp.get("market_position", ""),
    "features": comp.get("key_features", [])
} for comp in competitors if comp.get("strengths")]

# Identify patterns and gaps
analysis = self.openai_client.chat.completions.create(
    model="gpt-4o",
    messages=[{
        "role": "system",
        "content": "You are a senior VC analyst identifying market opportunities."
    }, {
        "role": "user",
        "content": f"""Analyze this market for opportunities.

TARGET STARTUP:
{target_startup}

COMPETITORS:
{json.dumps(competitor_summary, indent=2)}

Return JSON with:

1. market_overview:
- total_competitors: count
- market_maturity: "emerging"/"growing"/"mature"
- key_trends: array of trends

2. competitor_patterns:
- common_strengths: what most do well
- common_weaknesses: shared gaps
- positioning_clusters: how they group

3. whitespaces: array of opportunities:
- opportunity: the gap
- why_exists: why unfilled
- potential_value: business impact
- difficulty: "low"/"medium"/"high"

4. target_startup_positioning:
- competitive_advantages: what target does better
- vulnerability_areas: where at risk
- recommended_strategy: how to win (2-3 sentences)

Respond ONLY with valid JSON."""
    }],
    temperature=0.5
)
```

STEP 5: Generating Our Report and Tying It All Together

Our fifth and final step is putting together the report for the Investment Committee. We already have all the content we need for the report so it’s really just a matter of formatting it the right way:

```python
for comp in competitors:
    # Skip competitors whose analysis came back empty
    if not comp.get("strengths"):
        continue

    report += f"### {comp['name']}\n\n"
    report += f"**Website:** {comp['website']}\n\n"
    report += f"**Overview:** {comp.get('product_overview', 'N/A')}\n\n"

    report += "**Strengths:**\n"
    for strength in comp.get('strengths', []):
        report += f"- ✓ {strength}\n"

    report += "\n**Weaknesses:**\n"
    for weakness in comp.get('weaknesses', []):
        report += f"- ✗ {weakness}\n"

    report += f"\n**Comparison:** {comp.get('comparison_to_target', 'N/A')}\n\n"
    report += "---\n\n"

# Add market whitespaces
report += "## Market Opportunities\n\n"
for opportunity in analysis.get('whitespaces', []):
    report += f"### {opportunity.get('opportunity', 'Unknown')}\n\n"
    report += f"**Why it exists:** {opportunity.get('why_exists', 'N/A')}\n\n"
    report += f"**Potential value:** {opportunity.get('potential_value', 'N/A')}\n\n"
    report += f"**Difficulty:** {opportunity.get('difficulty', 'Unknown')}\n\n"

# Add strategic recommendations
positioning = analysis.get('target_startup_positioning', {})
report += "## Strategic Recommendations\n\n"
report += f"**Recommended Strategy:** {positioning.get('recommended_strategy', 'N/A')}\n\n"

report += "### Competitive Advantages\n\n"
for adv in positioning.get('competitive_advantages', []):
    report += f"- {adv}\n"

report += "\n### Areas of Vulnerability\n\n"
for vuln in positioning.get('vulnerability_areas', []):
    report += f"- {vuln}\n"
```

We can also add an executive summary at the top, which GPT-4o can generate for us from all the content.

```python
response = self.openai_client.chat.completions.create(
    model="gpt-4o",
    messages=[{
        "role": "system",
        "content": "You are a VC analyst writing executive summaries."
    }, {
        "role": "user",
        "content": f"""Write a 4-5 paragraph executive summary.

STARTUP: {startup['name']} - {startup['description']}
COMPETITORS: {len(competitors)} analyzed
ANALYSIS: {analysis.get('market_overview', {})}

Cover:
1. Market opportunity
2. Competitive dynamics
3. Key whitespaces
4. Target positioning
5. Investment implications

Professional, data-driven tone for VCs."""
    }],
    temperature=0.6
)

return response.choices[0].message.content
```

And finally, we can create a main.py file that calls each step sequentially and passes data along. We also save our data to a folder in case something goes wrong along the way.

```python
# Step 1: Analyze target
print("STEP 1: Analyzing target startup")
startup_info = discovery.analyze_startup(startup_url, startup_name)
print(f"✓ Analyzed {startup_info['name']} ({startup_info['category']})\n")

# Save intermediate data
with open(f"data/startup_info_{timestamp}.json", "w") as f:
    json.dump(startup_info, f, indent=2)

# Step 2: Discover competitors
print("STEP 2: Discovering competitors")
competitors = discovery.discover_competitors(
    startup_info['category'],
    startup_info['description'],
    startup_info.get('keywords', [])
)
print(f"✓ Found {len(competitors)} competitors\n")

with open(f"data/competitors_{timestamp}.json", "w") as f:
    json.dump(competitors, f, indent=2)

# Step 3: Analyze each competitor
print("STEP 3: Analyzing competitors")
competitor_details = []
for i, comp in enumerate(competitors, 1):
    print(f" {i}/{len(competitors)}: {comp['name']}")
    details = analyzer.analyze_competitor(
        comp['website'],
        comp['name'],
        startup_info
    )
    competitor_details.append(details)
print("✓ Completed competitor analysis\n")

with open(f"data/competitor_analysis_{timestamp}.json", "w") as f:
    json.dump(competitor_details, f, indent=2)

# Step 4: Identify market gaps
print("STEP 4: Identifying market opportunities")
market_analysis = analyzer.identify_market_gaps(
    startup_info,
    competitor_details
)
print(f"✓ Identified {len(market_analysis.get('whitespaces', []))} opportunities\n")

with open(f"data/market_analysis_{timestamp}.json", "w") as f:
    json.dump(market_analysis, f, indent=2)

# Step 5: Generate report
print("STEP 5: Generating report")
report_path = reporter.generate_report(
    startup_info,
    competitor_details,
    market_analysis
)
```

When I ran it on Parallel itself, I got a really good research report along with competitors like Exa and Firecrawl, plus gaps in the market.
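One note on the `main.py` excerpt: it relies on a `timestamp` variable and repeats the same save block four times. Here is a minimal sketch of how both could be handled. `make_timestamp` and `save_json` are hypothetical helpers, and the timestamp format is simply one I find convenient, not something the rest of the system depends on.

```python
import json
from datetime import datetime
from pathlib import Path


def make_timestamp(now=None) -> str:
    """Return a sortable, filename-safe timestamp such as 20250102_030405."""
    return (now or datetime.now()).strftime("%Y%m%d_%H%M%S")


def save_json(data, stem: str, timestamp: str, folder: str = "data") -> Path:
    """Write one intermediate result to <folder>/<stem>_<timestamp>.json."""
    out_dir = Path(folder)
    out_dir.mkdir(parents=True, exist_ok=True)  # create data/ on first run
    path = out_dir / f"{stem}_{timestamp}.json"
    path.write_text(
        json.dumps(data, indent=2, ensure_ascii=False), encoding="utf-8"
    )
    return path
```

With these in place, each save in `main.py` becomes a single line, for example `save_json(startup_info, "startup_info", timestamp)`, and a failed run can be resumed by loading the last file written for that timestamp.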

Extending Our System

This POC is fairly basic so I encourage you to try other things, starting with better prompts for Parallel.

For my client I’m extending this system by:

- Adding more searches. Right now I’m looking for articles but I also want to search sites like ProductHunt and Reddit, PR announcements, and more

- Enriching with founder information and funding data

- Adding visualizations to create market maps and competitive matrices

And while this is specific to VCs, there are so many other use cases that need search built-in, from people search for hiring to context retrieval for AI agents.

You can try their APIs in the playground, and if you need any help, reach out to me!