I’ve spent the last year deep in the trenches with every major AI coding tool. I’ve built everything from simple MVPs to complex agents, and if there’s one thing I’ve learned, it’s that the tools change, but the patterns remain consistent.

I’ve already written deep-dive guides on some of these tools – Claude Code, Amp Code, Cursor, and even a Vibe Coding manifesto.

So this post is the meta-playbook, the “director’s cut”, if you will. Everything I’ve learned about coding with AI, distilled into timeless principles you can apply across any tool, agent, or IDE.

Pattern 1: Document Everything

AI coding tools are only as good as the context you feed them. If you and I asked ChatGPT to suggest things to do in Spain, we’ll get different answers because it has different context about each of us.

So before you even start working with coding agents, you need to ensure you’ve got the right context.

1. Project Documentation as Your AI’s Brain

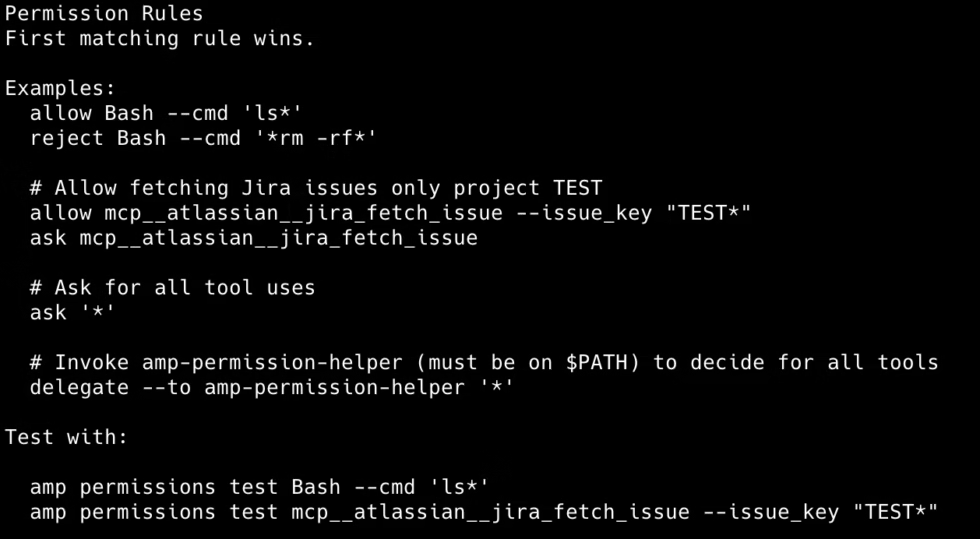

Every successful AI coding project starts with documentation that acts as your AI’s external memory. Whether you’re using Cursor’s .cursorrules, Claude Code’s CLAUDE.md, or Amp’s Agents.md, the pattern is identical:

- Project overview and goals – What are you building and why?

- Architecture decisions – How is the codebase structured?

- Coding conventions – What patterns does your team follow?

- Current priorities – What features are you working on?

Pro Tip: Ask your AI to generate this documentation first, then iterate on it. It’s like having your AI interview itself about your project.

2. The Selective Context Strategy

Most people either give the AI zero context (and get code slop) or dump their entire codebase into the context window (and overwhelm the poor thing).

The sweet spot? Surgical precision.

Bad Context: "Here's my entire React app, fix the bug"

Good Context: "This authentication component (attached) is throwing errors when users log in. Here's the error message and the auth service it calls. Fix the login flow."3. The Living Documentation Pattern

Your AI context isn’t set-it-and-forget-it. Treat it like a living document that evolves with your project. After major features or architectural changes, spend 5 minutes updating your context files.

Think of it like this: if you hired a new developer, what would they need to know to be productive? That’s exactly what your AI needs.

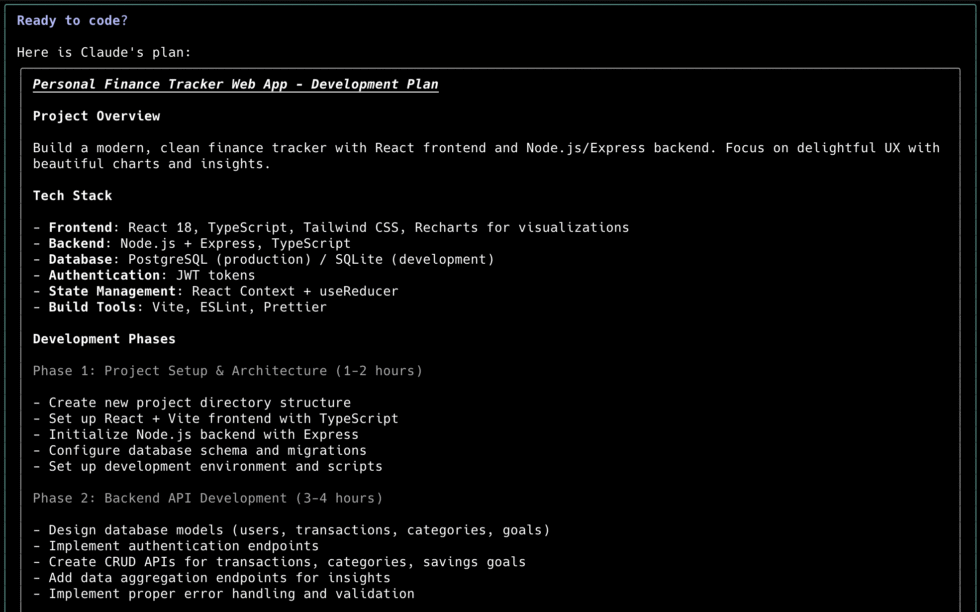

Pattern 2: Planning Before Code

When you jump straight into coding mode, you’re essentially asking your AI to be both the architect and the construction worker… at the same time. It might work for a treehouse but not a mansion.

Step 1: Start with a conversation, not code. Whether you’re in Cursor’s chat, Claude Code’s planning mode, or having a dialogue with Amp, begin with:

"I want to build [basic idea]. Help me flesh this out by asking questions about requirements, user flows, and technical constraints."The AI will ping-pong with you, asking clarifying questions that help you think through edge cases you hadn’t considered.

Step 2: Once requirements are solid, get architectural:

"Based on these requirements, suggest a technical architecture. Consider:

- Database schema and relationships

- API structure and endpoints

- Frontend component hierarchy

- Third-party integrations needed

- Potential scaling bottlenecks"Step 3: Once we’ve sorted out the big picture, we can get into the details. Ask your AI:

"Break this down into MVP features vs. nice-to-have features. What's the smallest version that would actually be useful?"The Feature Planning Framework

For each feature, follow this pattern:

- User story definition – What does the user want to accomplish?

- Technical breakdown – What components, APIs, and data models are needed?

- Testing strategy – How will you know it works?

- Integration points – How does this connect to existing code?

Save these plans as markdown files. Your AI can reference them throughout development, keeping you on track when scope creep tries to derail your focus.

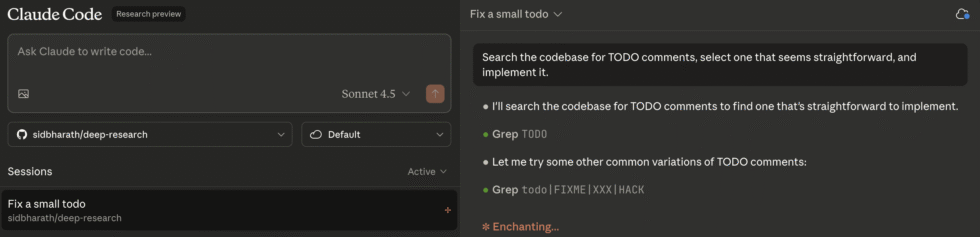

Pattern 3: Incremental Development

Building in small, testable chunks, is good software engineering practice. Instead of building the whole MVP in one shot, break off small chunks and work on that with the AI in separate conversations.

The Conversation Management Pattern

Every AI coding tool has context limits. Even the ones with massive context windows get confused when conversations become novels. Here’s the universal pattern:

Short Conversations for Focused Features

- One conversation = one feature or one bug fix

- When switching contexts, start a new conversation

- If a conversation hits 50+ exchanges, consider starting fresh

When starting a new conversation, give your AI a briefing:

"I'm working on the user authentication feature for our React app.

Previous context: We have a Node.js backend with JWT tokens and a React frontend.

Current task: Implement password reset functionality.

Relevant files: auth.js, UserController.js, and Login.component.jsx"

The Test-Driven AI Workflow

This is the secret sauce that separates the pros from the wannabes. Instead of asking for code directly, ask for tests first:

"Write tests for a password reset feature that:

1. Sends reset emails

2. Validates reset tokens

3. Updates passwords securely

4. Handles edge cases (expired tokens, invalid emails, etc.)"

Why this works:

- Tests force you to think through requirements

- AI-generated tests catch requirements you missed

- You can verify the tests make sense before implementing

- When implementation inevitably breaks, you have a safety net

The Iterative Refinement Strategy

Don’t expect perfection on the first try. The best AI-assisted development follows this loop:

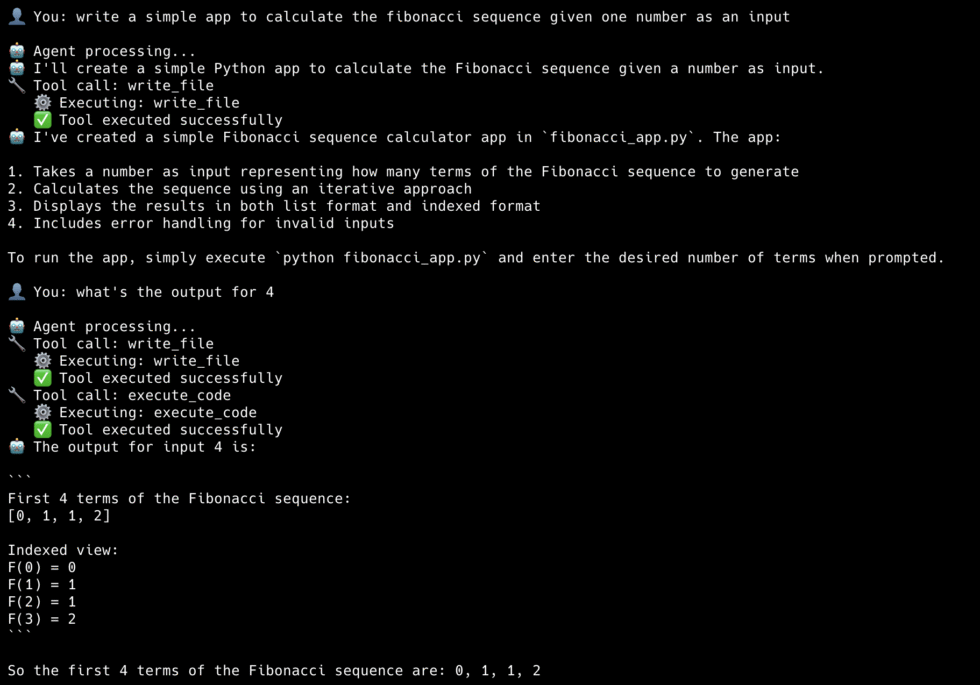

- Generate – Ask for initial implementation

- Test – Run the code and identify issues

- Refine – Provide specific feedback about what’s broken

- Repeat – Until it works as expected

"The login function you generated works, but it's not handling network errors gracefully. Add proper error handling with user-friendly messages and retry logic."

Pattern 4: Always Use Version Control

When you’re iterating fast with AI coding, the safest, sanest way to move is to create a new branch for every little feature, fix, or experiment. It keeps your diffs tiny, and creates multiple checkpoints that you can roll back to when something goes wrong.

The Branch-Per-Feature Philosophy

Just like you should start a new chat for every feature, make it a habit to also create a new git branch. With Claude Code you can create a custom slash command that starts a new chat and also creates a new branch at the same time.

Here’s why this matters more with AI than traditional coding:

- AI generates code in bursts. When Claude Code or Cursor spits out 200 lines of code in 30 seconds, you need a clean way to isolate and evaluate that change before it touches your main branch.

- Experimentation becomes frictionless. Want to try two different approaches to the same problem? Spin up two branches and let different AI instances work on each approach. Compare the results, keep the winner, delete the loser.

- Rollbacks are inevitable. That beautiful authentication system your AI built? It might work perfectly until you discover it breaks your existing user flow. With proper branching, rollback is one command instead of hours of manual cleanup.

Test Before You Commit

Just like your dating strategy, you want to test your code before you actually commit it. Ask the AI to run tests, see if it builds correctly, and try your app on your localhost.

Commit code only when you are completely satisfied that everything is in order. See more on testing in Pattern 7.

Oh and just so you know, code that works on your development environment may not work on production. I recently ran into an issue where my app was loading blazingly fast on my local dev environment, but when I deployed it to the cloud it took ages to load.

I asked my AI to identify it and it looked through my commit history to isolate that it was because we added more data to our DB, which is fast locally but takes time in production. Which brings me to…

The Commit Message Strategy for AI Code

Your commit messages become crucial documentation when working with AI. Future you (and your team) need to know:

Bad commit message:

Add dashboardGood commit message:

Implement user dashboard with analytics widgets

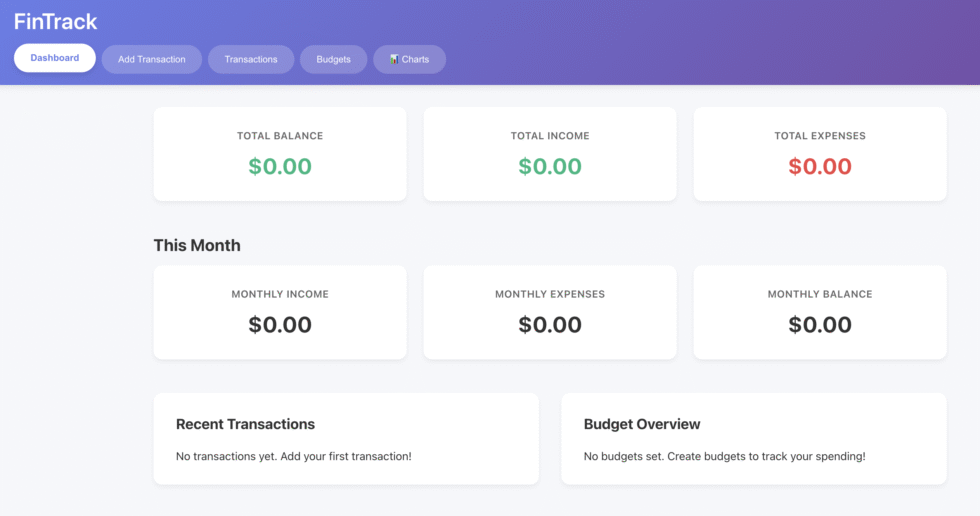

- Created DashboardComponent with React hooks

- Added API integration for user stats

- Responsive grid layout with CSS Grid

- Generated with Cursor AI, manually reviewed for security

- Tested with sample data, needs real API integration

Co-authored-by: AI AssistantThis tells the story: what was built, how it was built, what still needs work, and acknowledges AI involvement.

Version Control as AI Training Data

Your git history becomes a training dataset for your future AI collaborations. Clean, descriptive commits help you give better context to AI tools:

“I’m working on the user authentication system. Here’s the git history of how we built our current auth (git log –oneline auth/). Build upon this pattern for the new OAuth integration.”

The better your git hygiene, the better context you can provide to AI tools for future development.

Pattern 5: Review Code Constantly

AI can generate code faster than you can blink, but it can also generate technical debt at light speed. The developers who maintain clean codebases with AI assistance have developed quality control reflexes that activate before anything gets committed.

The AI Code Review Checklist

Before accepting any AI-generated code, run through this mental checklist:

Functionality Review:

- Does this actually solve the problem I described?

- Are there edge cases the AI missed?

- Does the logic make sense for our specific use case?

Integration Review:

- Does this follow our existing patterns and conventions?

- Will this break existing functionality?

- Are the imports and dependencies correct?

Security Review:

- Are there any obvious security vulnerabilities?

- Is user input being validated and sanitized?

- Are secrets and sensitive data handled properly?

Performance Review:

- Are there any obvious performance bottlenecks?

- Is this approach scalable for our expected usage?

- Are expensive operations being cached or optimized?

The Explanation Demand Strategy

Never accept code you don’t understand. Make it a habit to ask:

"Explain the approach you took here. Why did you choose this pattern over alternatives? What are the trade-offs?"This serves two purposes:

- You learn something new (AI often suggests patterns you wouldn’t have thought of)

- You catch cases where the AI made suboptimal choices

The Regression Prevention Protocol

AI is fantastic at implementing features but terrible at understanding the broader impact of changes. Develop these habits:

- Commit frequently – Small, atomic commits make it easy to rollback when AI breaks something (see previous section).

- Run tests after every significant change – Don’t let broken tests pile up

- Use meaningful commit messages – Your future self will thank you when debugging

Pattern 6: Handling Multiple AI Instances

As your projects grow in complexity, you’ll hit scenarios where you need more sophisticated coordination.

The Parallel Development Pattern

For complex features, run multiple AI instances focusing on different aspects:

- Instance 1: Frontend components and user interface

- Instance 2: Backend API endpoints and database logic

- Instance 3: Testing, debugging, and integration

Each instance maintains its own conversation context, preventing the confusion that happens when one AI tries to juggle multiple concerns.

The Specialized Agent Strategy

Different AI tools excel at different tasks:

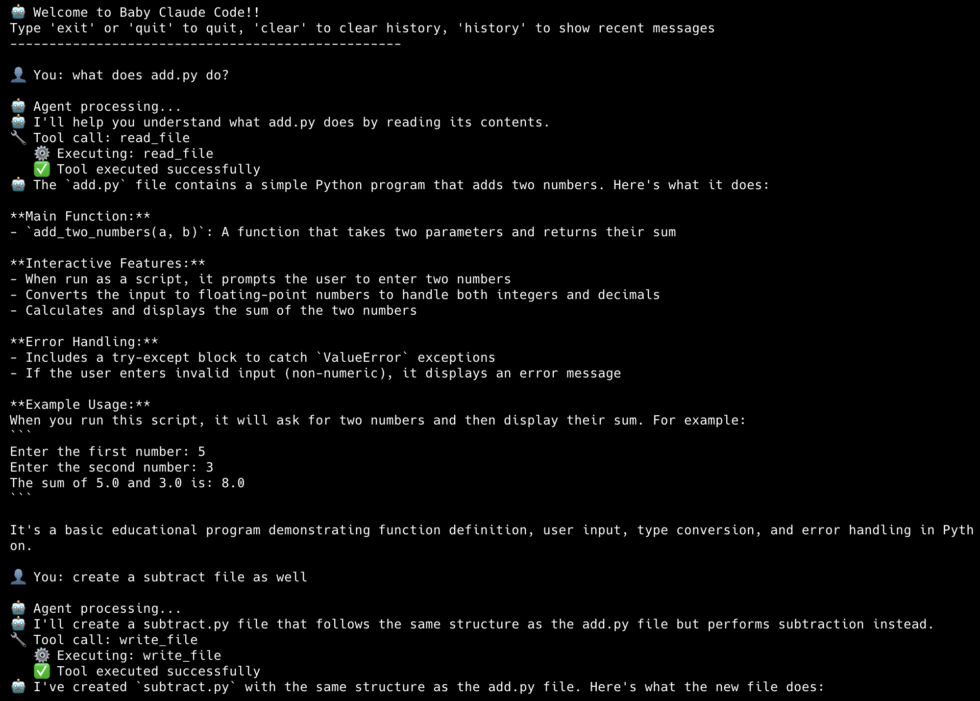

- Code generation: Claude Code or Amp for rapid prototyping and building features

- Debugging and troubleshooting: Cursor or GitHub Copilot for inline suggestions

- Architecture and planning: Claude or Gemini for high-level thinking

- Testing and quality assurance: Specialized subagents or custom prompts

The Cross-Tool Context Management

When working across multiple tools, maintain consistency with shared documentation:

- Keep architecture diagrams and requirements in a shared location

- Use consistent naming conventions and coding standards

- Document decisions and changes in a central wiki or markdown files

Pattern 7: Debugging and Problem-Solving

The Universal Debugging Mindset

AI-generated code will break. Not if, when. The developers who handle this gracefully have internalized debugging patterns that work regardless of which AI tool they’re using.

The Systematic Error Resolution Framework

Step 1: Isolate the Problem Don’t dump a wall of error text and hope for magic. Instead:

"I'm getting this specific error: [exact error message]

This happens when: [specific user action or condition]

Expected behavior: [what should happen instead]

Relevant code: [only the functions/components involved]"Step 2: Add Debugging Infrastructure Ask your AI to add logging and debugging information:

"Add console.log statements to track the data flow through this function. I need to see what's actually happening vs. what should be happening."Step 3: Test Hypotheses Methodically Work with your AI to form and test specific hypotheses:

"I think the issue might be with async timing. Let's add await statements and see if that fixes the race condition."The Fallback Strategy Pattern

When your AI gets stuck in a loop (trying the same failed solution repeatedly), break the cycle:

- Stop the current conversation

- Start fresh with better context

- Try a different approach or tool

- Simplify the problem scope

The Human Override Protocol

Sometimes you need to step in and solve things manually. Recognize these situations:

- AI keeps suggesting the same broken solution

- The problem requires domain knowledge the AI doesn’t have

- You’re dealing with legacy code or unusual constraints

- Time pressure makes manual fixes more efficient

Pattern 8: Scaling and Maintenance

Building with AI is easy. Maintaining and scaling AI-generated code? That’s where many projects die. The successful long-term practitioners have developed sustainable approaches.

The Documentation Discipline

As your AI-assisted codebase grows, documentation becomes critical:

- Decision logs – Why did you choose certain approaches?

- Pattern libraries – What conventions emerged from your AI collaboration?

- Gotcha lists – What quirks and limitations did you discover?

- Onboarding guides – How do new team members get productive quickly?

The Refactoring Rhythm

Schedule regular refactoring sessions where you:

- Clean up AI-generated code that works but isn’t optimal

- Consolidate duplicate patterns

- Update documentation and context files

- Identify technical debt before it becomes problematic

The Knowledge Transfer Strategy

Don’t become the only person who understands your AI-generated codebase:

- Share your prompting strategies with the team

- Document your AI tool configurations and workflows

- Create reusable templates and patterns

- Train other team members on effective AI collaboration

Pattern 9: Mindset and Workflow

Reframing Your Relationship with AI

The most successful AI-assisted developers have fundamentally reframed how they think about their relationship with AI tools. Think of your role as:

- An editor: curating drafts, not creating everything from scratch.

- A director: guiding talented actors (the AIs) through each scene.

- A PM: breaking down the problem into tickets.

The Collaborative Mindset Shift

From “AI will do everything” to “AI will accelerate everything”

AI isn’t going to architect your application or make strategic decisions. But it will implement your ideas faster than you thought possible, generate boilerplate you’d rather not write, and catch errors you might have missed.

The Prompt Engineering Philosophy

Good prompt engineering isn’t about finding magic words that unlock AI potential. It’s about clear communication and precise requirements, skills that make you a better developer overall.

The Specificity Principle: Vague prompts get vague results. Specific prompts get specific results.

Vague: "Make this component better"

Specific: "Optimize this React component by memoizing expensive calculations, adding proper error boundaries, and implementing loading states for async operations"The Iterative Improvement Loop

Embrace the fact that AI development is a conversation, not a command sequence:

- Express intent clearly

- Review and test the output

- Provide specific feedback

- Iterate until satisfied

This is how all good software development works, just at AI speed.

The Real-World Implementation Guide

Week 1: Foundation Setup

- Choose your primary AI coding tool and set up proper context files

- Create a simple project to practice basic patterns

- Establish your documentation and workflow habits

Week 2: Development Flow Mastery

- Practice the test-driven AI workflow on real features

- Experiment with conversation management strategies

- Build your code review and quality control reflexes

Week 3: Advanced Techniques

- Try multi-instance development for complex features

- Experiment with different tools for different tasks

- Develop your debugging and problem-solving workflows

Week 4: Scale and Optimize

- Refactor and clean up your AI-generated codebase

- Document your learned patterns and approaches

- Share knowledge with your team

AI Coding is Human Amplification

To all the vibe coders out there: AI coding tools don’t replace good development practices, but they do make good practices more important.

The developers thriving in this new landscape aren’t the ones with the best prompts or the latest tools. They’re the ones who understand software architecture, can communicate requirements clearly, and have developed the discipline to maintain quality at AI speed.

Your AI assistant will happily generate 500 lines of code in 30 seconds. Whether that code is a masterpiece or a maintenance nightmare depends entirely on the human guiding the process.

So here’s my challenge to you: Don’t just learn to use AI coding tools. Learn to direct them. Be the architect, let AI be the construction crew, and together you’ll build things that neither humans nor AI could create alone.

The age of AI-assisted development isn’t coming—it’s here. The question isn’t whether you’ll use these tools, but whether you’ll master them before they become table stakes for every developer.

Now stop reading guides and go build something amazing. Your AI assistant is waiting.

Ready to Level Up Your AI Coding Game?

This guide barely scratches the surface of what’s possible when you truly master AI-assisted development. Want to dive deeper into specific tools, advanced techniques, and real-world case studies?

What’s your biggest AI coding challenge right now? Contact me and let’s solve it together. Whether you’re struggling with context management, debugging AI-generated code, or scaling your workflows, I’ve probably been there.

And if this guide helped you level up your AI coding game, share it with a fellow developer who’s still fighting with their AI instead of collaborating with it.

Want to build your own AI agents?

Sign up for my newsletter covering everything from the tools, APIs, and frameworks you need, to building and serving your own multi-step AI agents.